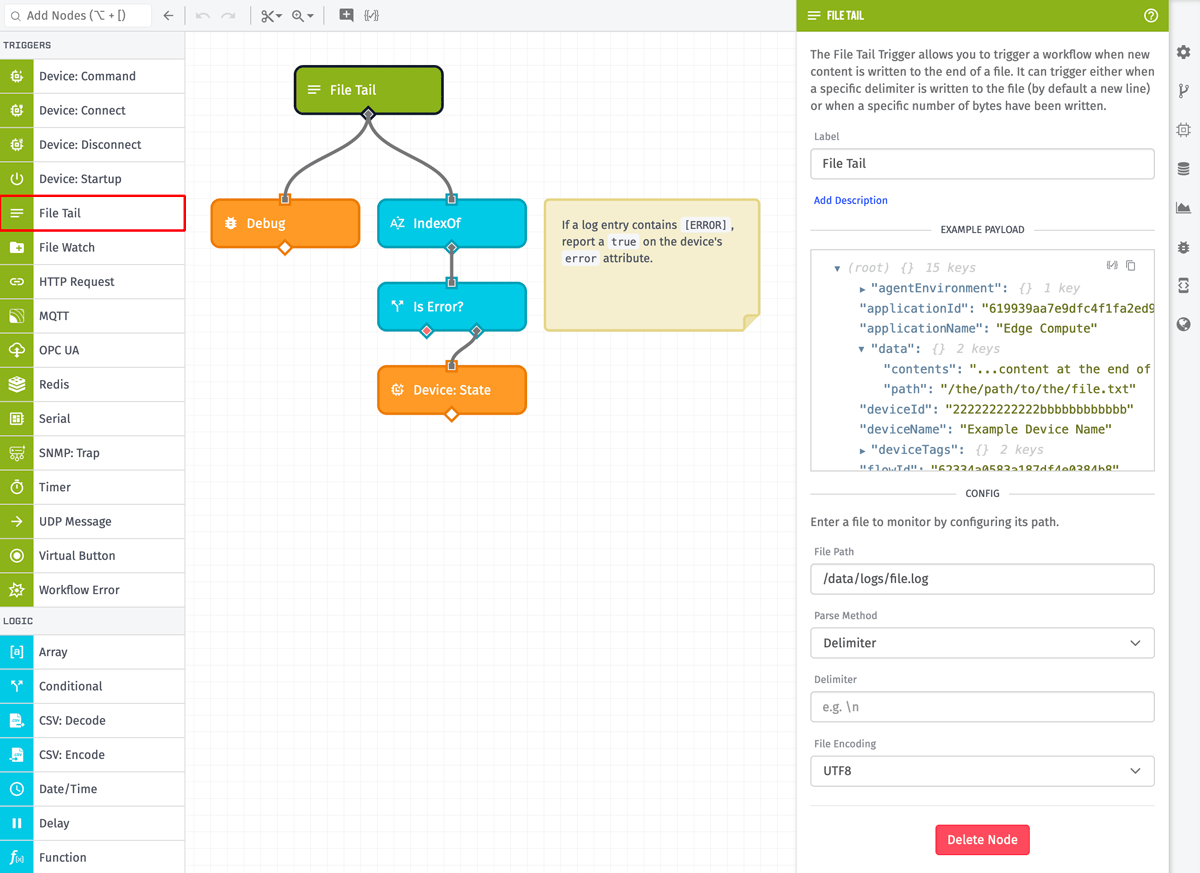

File Tail Trigger

The File Tail Trigger allows you to monitor and analyze logs and other files on the Gateway Edge Agent’s (GEA) container or host file system. This trigger monitors new data being written to a file and fires a workflow whenever a configured delimiter or byte length has been reached.

In most use cases, this node works similarly to the Linux tail command when using the -f (follow) option.

Node Properties

File Path

This property defines the full path of the file to tail (e.g. /data/logs/file.log). The GEA runs inside a Docker container, so this path is a location on the container’s file system. To access files located on the host file system, mount them into the container using Docker volumes. For example:

docker run -v /var/log/file.log:/data/logs/file.log ...

Invalid permissions are a common issue when mounting files from the host into the container. In most cases, files located on the host file system cannot be accessed by the GEA without first changing permissions. For example:

sudo chmod a+rwx /var/log/file.log

You can verify that the GEA has correct access to the file by attempting to manually tail the file from within the container. The example below indicates that the file has not been granted proper permissions.

$ docker exec -it CONTAINER_ID bash

# You're now inside the container

$ tail /data/logs/file.log

tail: cannot open '/data/logs/file.log' for reading: Permission denied

Parse Method

As new data is being received from the configured file, the Parse Method property controls when to fire workflows. The available options are:

- Delimiter: Fire the workflow whenever the configured character or string is received.

- Byte Length: Fire the workflow whenever the configured number of bytes has been received.
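The difference between the two parse methods can be sketched in a few lines of JavaScript. This is only an illustration of the concept, not the GEA's actual implementation; the helper names `splitByDelimiter` and `splitByByteLength` are hypothetical.

```javascript
// Hypothetical helpers illustrating the two parse methods.
// They are NOT part of the GEA; they only model the described behavior.

// Delimiter: emit every complete message, keep the unterminated tail buffered.
function splitByDelimiter(buffered, chunk, delimiter) {
  const parts = (buffered + chunk).split(delimiter);
  const remainder = parts.pop(); // data after the last delimiter is incomplete
  return { messages: parts, remainder };
}

// Byte Length: emit fixed-size slices, keep any leftover bytes buffered.
function splitByByteLength(buffered, chunk, byteLength) {
  let data = Buffer.concat([buffered, chunk]);
  const messages = [];
  while (data.length >= byteLength) {
    messages.push(data.subarray(0, byteLength));
    data = data.subarray(byteLength);
  }
  return { messages, remainder: data };
}

const byDelimiter = splitByDelimiter('', 'line one\nline two\npartial', '\n');
console.log(byDelimiter.messages);  // two complete lines, delimiter removed
console.log(byDelimiter.remainder); // 'partial' waits for the next delimiter

const byLength = splitByByteLength(Buffer.alloc(0), Buffer.from([1, 2, 3, 4, 5]), 2);
console.log(byLength.messages.length); // two 2-byte messages
console.log(byLength.remainder);       // one byte still buffered
```

In both cases, each entry in `messages` would correspond to one workflow run, and `remainder` to data still waiting in the buffer.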

Delimiter

When Parse Method is set to Delimiter, this property defines the character or string that, when received, fires the workflow. The delimiter is not included in the file content on the resulting payload. If no value is entered, the delimiter defaults to \n (line feed). When tailing log files, the most common delimiters are:

- \n (line feed): The newline character for Unix/Mac OS X.

- \r\n (carriage return + line feed): The newline character for Windows.

- \r (carriage return): The newline character for Mac OS before X.

- \t (tab)

- \0 (null character)

Unlike most fields in the workflow editor, special characters entered here are automatically unescaped. This means \n is interpreted as a line feed character instead of the literal string "\n". In the unlikely scenario that you do require the literal string version of a special character, you can do so by adding an extra backslash (e.g. \\n or \\r\\n).
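The unescaping rule described above can be modeled roughly as follows. This is a sketch under the assumption that only the escapes listed in this section (plus the backslash itself) are handled; `unescapeDelimiter` is a hypothetical name, and the GEA's real logic may differ.

```javascript
// Hypothetical model of how text typed into the Delimiter field is unescaped.
// Assumption: only \n, \r, \t, \0 and \\ are treated specially.
const ESCAPES = { n: '\n', r: '\r', t: '\t', '0': '\0', '\\': '\\' };

function unescapeDelimiter(input) {
  // Replace each backslash escape with the character it stands for.
  return input.replace(/\\([nrt0\\])/g, (match, ch) => ESCAPES[ch]);
}

// String.raw keeps the backslashes exactly as a user would type them.
const lineFeed = unescapeDelimiter(String.raw`\n`);  // a single line feed character
const literal = unescapeDelimiter(String.raw`\\n`);  // a backslash followed by "n"
const windows = unescapeDelimiter(String.raw`\r\n`); // carriage return + line feed

console.log(lineFeed.length, literal.length, windows.length);
```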

The delimiter field supports strings up to 16 characters in length. For example, if messages in your file are not delimited by a single newline character, but are instead delimited by a multi-character string (e.g. NEW_LOG_MSG), you can enter that string in this field.

Delimiter Memory Considerations

All file data received between delimiters is buffered in memory. There is no limit to the amount of data that will be buffered while waiting for the delimiter string. If your scenario involves files that receive a significant amount of data between delimiters, this may have a negative impact on available system memory. In extreme cases, the host system can run out of memory, which may result in the GEA process being closed by the host operating system. For optimal payload size and GEA performance, the recommended maximum amount of buffered data between delimiters is 5MB.
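One way to notice when messages approach the recommended size is to measure the content in a Function Node. The sketch below is only an illustration: it assumes 5MB means 5 × 1024 × 1024 bytes and uses a stand-in `payload` object, and because the check runs after the data has already been buffered, it can only detect oversized messages, not prevent them.

```javascript
// Assumption: "5MB" is interpreted as 5 * 1024 * 1024 bytes.
const MAX_RECOMMENDED_BYTES = 5 * 1024 * 1024;

// Returns true when the content is larger than the recommended maximum.
function exceedsRecommendedSize(content, encoding = 'utf8') {
  return Buffer.byteLength(content, encoding) > MAX_RECOMMENDED_BYTES;
}

// Stand-in for the payload a Function Node would receive from this trigger.
const payload = { data: { content: 'short log line', path: '/data/logs/file.log' } };

payload.working = payload.working || {};
payload.working.oversized = exceedsRecommendedSize(payload.data.content);
console.log(payload.working.oversized);
```

A downstream Conditional Node could then branch on `working.oversized`, for example to raise an alert that the delimiter may be misconfigured.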

Byte Length

When Parse Method is set to Byte Length, this property defines the number of bytes that must be received before firing the workflow. This option is commonly used to monitor files that contain binary data instead of text data.

Accessing and Parsing Binary Data

When using the Byte Length option, the most common next step is to parse the raw binary data into meaningful values. The recommended Output Encoding is Base64, which places your binary data on the payload in a format that’s easier to inspect and debug. There are several online Base64 decoders that you can use to inspect the data by copying the value from the debug output. The Binary encoding option also works; however, it results in debug output that can be less helpful.
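As an alternative to an online decoder, a Base64 value copied from the debug output can be inspected locally with Node.js. The sketch below builds its own sample value so that it is self-contained; the nine sample bytes (one byte, a 32-bit integer, a 32-bit float) are made up for illustration.

```javascript
// Made-up sample: byte 0x01, int32 little-endian 2, float32 little-endian 1.5.
const sampleBytes = Buffer.from([0x01, 0x02, 0x00, 0x00, 0x00, 0x00, 0x00, 0xc0, 0x3f]);

// This is what would appear on the payload with Base64 Output Encoding.
const base64Content = sampleBytes.toString('base64');

// Decode the Base64 string back to bytes and print them as hex for inspection.
const decoded = Buffer.from(base64Content, 'base64');
const hexDump = decoded.toString('hex');

console.log(base64Content);
console.log(hexDump); // 01020000000000c03f
```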

Accessing the underlying byte data in your workflow requires the use of a Function Node and the Buffer Object. For example:

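// Decode the Base64 string into a Buffer of raw bytes.
let buffer = Buffer.from(payload.data.content, 'base64');

// If Output Encoding is Binary, decode with 'binary' instead:
// let buffer = Buffer.from(payload.data.content, 'binary');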

payload.working ||= {};

payload.working.parsed = {

firstByte: buffer[0],

nextInteger: buffer.readInt32LE(1),

nextFloat: buffer.readFloatLE(5)

};

Output Encoding

The Output Encoding property defines how the received data should be encoded before it’s placed on the payload. For text-based log files, the most common encoding is UTF-8, which is also the default.

If you select Binary as the encoding option, the data will be added to the payload as a binary-encoded string. See Accessing and Parsing Binary Data for details on how to access the underlying byte data.
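To illustrate what a binary-encoded string is, the sketch below round-trips a few bytes through Node.js's 'binary' (latin1) encoding. It assumes the Binary option corresponds to that Node.js encoding, and the sample bytes are made up.

```javascript
// Made-up sample bytes, including values above 0x7f that are not valid ASCII.
const original = Buffer.from([0x00, 0x7f, 0x80, 0xff]);

// A binary-encoded string holds one character per byte.
const binaryString = original.toString('binary');

// Decoding with 'binary' recovers the exact bytes.
const restored = Buffer.from(binaryString, 'binary');

// Decoding with the default (UTF-8) does not: characters at or above 0x80
// expand to two bytes each, so the data no longer matches.
const wrong = Buffer.from(binaryString);

console.log(restored.equals(original)); // true
console.log(wrong.length);              // 6, not 4
```

This is why the encoding argument matters when converting the payload string back into a Buffer.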

When viewing the file content in the debug output, the data will be UTF-8-encoded for presentation purposes. This can sometimes lead to confusion since the debug output may not visually reflect the underlying payload data. This is especially true when selecting Binary as the encoding since the debug output will display the data as a UTF-8-encoded string, which often looks like a series of random characters. The encoding process for the debug output has no impact on the underlying payload data.

Payload

The trigger’s initial payload contains a data object with the file path and new file content. The content property is always a string that’s encoded based on your selected Output Encoding.

{

"agentEnvironment": {

"EXAMPLE": "Environment Variable"

},

"agentVersion": "1.31.0",

"applicationId": "555555555555eeeeeeeeeeee",

"applicationName": "My Great Application",

"data": {

"content": "<new file content as encoded string>",

"path": "<path to file>"

},

"device": {

"applicationId": "555555555555eeeeeeeeeeee",

"attributes": {

"exampleNumber": {

"attributeTags": {

"attrTagKey": "tagValue1"

},

"dataType": "number",

"name": "exampleNumber"

},

"exampleString": {

"attributeTags": {

"attrTagKey": "tagValue2"

},

"dataType": "string",

"name": "exampleString"

}

},

"creationDate": "2018-03-16T18:19:03.376Z",

"deviceClass": "edgeCompute",

"deviceId": "222222222222bbbbbbbbbbbb",

"id": "222222222222bbbbbbbbbbbb",

"name": "My Great Device",

"tags": {

"aTagKey": [

"exampleTagValue"

],

"tagWithMultipleValues": [

"tagValue",

"anotherTagValue"

]

}

},

"deviceId": "222222222222bbbbbbbbbbbb",

"deviceName": "My Great Device",

"deviceTags": {

"aTagKey": [

"exampleTagValue"

],

"tagWithMultipleValues": [

"tagValue",

"anotherTagValue"

]

},

"flowId": "333333333333cccccccccccc",

"flowName": "My Great Workflow",

"flowVersion": "myFlowVersion",

"globals": {

"aJsonGlobal": {

"key": "value"

},

"aNumberGlobal": 42,

"aStringGlobal": "My value"

},

"isConnectedToLosant": true,

"time": Fri Feb 19 2016 17:26:00 GMT-0500 (EST),

"triggerId": "111111111111aaaaaaaaaaaa",

"triggerType": "fileTail"

}

Related Nodes
